Installing LocalAI on an AWS EC2 Instance

I created an EC2 instance running Amazon Linux from a CloudFormation template (the package output below shows Amazon Linux 2023).

Connect to the EC2 instance using SSH, update the system, and look up the Docker package:

$ sudo yum update
Last metadata expiration check: 0:02:36 ago on Thu Sep 21 15:49:01 2023.
Dependencies resolved.
Nothing to do.
Complete!

$ sudo yum search docker
Last metadata expiration check: 0:03:32 ago on Thu Sep 21 15:49:01 2023.
================== Name Exactly Matched: docker ==================
docker.x86_64 : Automates deployment of containerized applications
===================== Summary Matched: docker =====================
amazon-ecr-credential-helper.x86_64 : Amazon ECR Docker Credential Helper
ecs-service-connect-agent.x86_64 : ECS Service Connect Agent containing the proxy docker image
nerdctl.x86_64 : nerdctl is a Docker-compatible CLI for containerd.
oci-add-hooks.x86_64 : Injects OCI hooks as a Docker runtime

$ sudo yum info docker
Last metadata expiration check: 0:04:33 ago on Thu Sep 21 15:49:01 2023.
Available Packages
Name         : docker
Version      : 24.0.5
Release      : 1.amzn2023.0.1
Architecture : x86_64
Size         : 42 M
Source       : docker-24.0.5-1.amzn2023.0.1.src.rpm
Repository   : amazonlinux
Summary      : Automates deployment of containerized applications
URL          : http://www.docker.com
License      : ASL 2.0 and MIT and BSD and MPLv2.0 and WTFPL
Description  : Docker is an open-source engine that automates the deployment of any
             : application as a lightweight, portable, self-sufficient container that will
             : run virtually anywhere.
             :
             : Docker containers can encapsulate any payload, and will run consistently on
             : and between virtually any server. The same container that a developer builds
             : and tests on a laptop will run at scale, in production*, on VMs, bare-metal
             : servers, OpenStack clusters, public instances, or combinations of the above.

$ sudo yum install -y docker

Add group membership for the default ec2-user so you can run all docker commands without using the sudo command:

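$ sudo usermod -a -G docker ec2-user
$ id ec2-user
$ newgrp docker

The newgrp command applies the new group membership to the current shell only; other sessions pick it up after you log out and log back in.

Check if Docker is installed: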

$ docker version
Client:
 Version:           24.0.5
 API version:       1.43
 Go version:        go1.20.7
 Git commit:        ced0996
 Built:             Thu Aug 31 00:00:00 2023
 OS/Arch:           linux/amd64
 Context:           default
Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?

The client is installed, but the Docker daemon is not running yet. Let's start the Docker service and make it start at boot.

Enable the Docker service so that it starts when the instance boots:

$ sudo systemctl enable docker.service
Created symlink /etc/systemd/system/multi-user.target.wants/docker.service → /usr/lib/systemd/system/docker.service.

Start the Docker service:

$ sudo systemctl start docker.service
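Once the service is running, docker version should no longer report "Cannot connect to the Docker daemon". The sketch below scripts two checks from this section: whether a user belongs to the docker group, and waiting until the daemon answers `docker info`. The function names and the 30-second default timeout are illustrative choices, not part of Docker.

```shell
#!/bin/sh
# Sketch: post-install checks for Docker on the EC2 instance.
# Function names and the 30-second default timeout are illustrative choices.

# Succeeds if user $1 belongs to group $2 (e.g. ec2-user and docker).
in_group() {
  local user="$1" group="$2"
  id -nG "$user" 2>/dev/null | tr ' ' '\n' | grep -qx "$group"
}

# Polls the Docker daemon until "docker info" succeeds or $1 seconds pass.
wait_for_docker() {
  local timeout="${1:-30}" waited=0
  until docker info >/dev/null 2>&1; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "Docker daemon not reachable after ${timeout}s" >&2
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
  echo "Docker daemon is up"
}
```

For example, run `in_group ec2-user docker || echo "log out and back in"` and `sudo systemctl enable --now docker.service && wait_for_docker`; `enable --now` enables the unit and starts it in a single step.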

How to control the Docker service?

Use the systemctl command as follows:

$ sudo systemctl start docker.service #<-- start the service

$ sudo systemctl stop docker.service #<-- stop the service

$ sudo systemctl restart docker.service #<-- restart the service

$ sudo systemctl status docker.service #<-- get the service status

How to install docker-compose?

Download the docker-compose binary for your OS and CPU architecture:

$ wget [https://github.com/docker/compose/releases/latest/download/docker-compose-$(uname]

$ sudo mv docker-compose-$(uname -s)-$(uname -m) /usr/local/bin/docker-compose

$ sudo chmod -v +x /usr/local/bin/docker-compose

$ docker-compose version

Docker Compose version v2.22.0
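As an alternative to the wget, mv and chmod steps above, the sketch below builds the release asset name from uname and downloads it through GitHub's `releases/latest/download/` redirect. It assumes the Compose v2 asset naming (lowercase OS name, e.g. `docker-compose-linux-x86_64`), which is why the OS name is lowercased; `compose_asset` and `install_compose` are hypothetical helper names.

```shell
#!/bin/sh
# Sketch: install the standalone Docker Compose v2 binary.
# Assumes Compose v2 release asset names such as docker-compose-linux-x86_64.

# Prints the asset name for an OS/arch pair (defaults to this machine's uname).
compose_asset() {
  local os arch
  os=$(printf '%s' "${1:-$(uname -s)}" | tr '[:upper:]' '[:lower:]')
  arch="${2:-$(uname -m)}"
  printf 'docker-compose-%s-%s\n' "$os" "$arch"
}

# Downloads the latest release to /usr/local/bin and prints its version.
install_compose() {
  local url
  url="https://github.com/docker/compose/releases/latest/download/$(compose_asset)"
  sudo curl -fL "$url" -o /usr/local/bin/docker-compose &&
    sudo chmod +x /usr/local/bin/docker-compose &&
    docker-compose version
}
```

Running `install_compose` should end with a version line like the one shown above.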

How to install git?

$ sudo yum install -y git

Check that it works:

$ git -v

git version 2.40.1

How to install LocalAI?

Clone the LocalAI repository with git:

$ git clone https://github.com/go-skynet/LocalAI

$ cd LocalAI

Next, create the .env file.

NOTE: the .env file must be in the same folder as the docker-compose.yaml file.

    ## Set number of threads.
    ## Note: prefer the number of physical cores. Overbooking the CPU degrades performance notably.
    THREADS=2

    ## Specify a different bind address (defaults to ":8080")
    # ADDRESS=127.0.0.1:8080

    ## Default models context size
    # CONTEXT_SIZE=512
    #
    ## Define galleries.
    ## models available to install will be visible in `/models/available`
    GALLERIES=[{"name":"model-gallery", "url":"github:go-skynet/model-gallery/index.yaml"}, {"url": "github:go-skynet/model-gallery/huggingface.yaml","name":"huggingface"}]

    ## CORS settings
    # CORS=true
    # CORS_ALLOW_ORIGINS=*

    ## Default path for models
    #
    MODELS_PATH=/models

    ## Enable debug mode
    DEBUG=true

    ## Specify a build type. Available: cublas, openblas, clblas.
    # Leave this commented out, as we are running on CPU:
    # BUILD_TYPE=cublas

    ## Uncomment and set to true to enable rebuilding from source
    REBUILD=true

    ## Enable go tags, available: stablediffusion, tts
    ## stablediffusion: image generation with stablediffusion
    ## tts: enables text-to-speech with go-piper
    ## (requires REBUILD=true)
    #
    #GO_TAGS=tts

    ## Path where to store generated images
    # IMAGE_PATH=/tmp

    ## Specify a default upload limit in MB (whisper)
    # UPLOAD_LIMIT

    # HUGGINGFACEHUB_API_TOKEN=Token here
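The THREADS comment recommends physical cores rather than vCPUs. A minimal sketch, assuming lscpu from util-linux is installed: it counts unique core/socket pairs to get the number of physical cores, then writes only the active (uncommented) settings from the listing above into .env. The helper names are hypothetical; compare the resulting THREADS value with your instance type's specification.

```shell
#!/bin/sh
# Sketch: generate LocalAI's .env next to docker-compose.yaml.
# Helper names are hypothetical; values mirror the uncommented lines above.

# Counts physical cores as unique (core, socket) pairs reported by lscpu.
physical_cores() {
  lscpu --parse=CORE,SOCKET | grep -v '^#' | sort -u | wc -l | tr -d ' '
}

# Writes .env into directory $1 (default: the current directory).
write_env() {
  local dir="${1:-.}" threads
  threads=$(physical_cores)
  cat > "$dir/.env" <<EOF
THREADS=${threads}
GALLERIES=[{"name":"model-gallery", "url":"github:go-skynet/model-gallery/index.yaml"}, {"url": "github:go-skynet/model-gallery/huggingface.yaml","name":"huggingface"}]
MODELS_PATH=/models
DEBUG=true
REBUILD=true
EOF
}
```

Usage: `cd LocalAI && write_env . && cat .env`.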

The docker-compose.yaml file:

    version: '3.6'

    services:
      api:
        image: quay.io/go-skynet/local-ai:latest
        build:
          context: .
          dockerfile: Dockerfile
        tty: true
        ports:
          - 8080:8080
        env_file:
          - .env
        volumes:
          - ./models:/models:cached
        command: ["/usr/bin/local-ai"]

Finally, start LocalAI:

$ docker-compose up -d --pull always

Let's check if LocalAI is running.

$ docker ps -a

CONTAINER ID   IMAGE                               COMMAND                  CREATED          STATUS                      PORTS                                       NAMES
629aefae1095   quay.io/go-skynet/local-ai:latest   "/build/entrypoint.s…"   15 minutes ago   Up 15 minutes (unhealthy)   0.0.0.0:8080->8080/tcp, :::8080->8080/tcp   localai-api-1
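The container is up, but Docker marks it as (unhealthy) at this point. Instead of rerunning docker ps, you can poll the API from the instance itself until it responds. This sketch requests /v1/models, the endpoint used later in this post; the function name, the 600-second timeout and the 5-second interval are assumptions. Meanwhile, `docker logs -f localai-api-1` follows the container's output.

```shell
#!/bin/sh
# Sketch: wait until the LocalAI API responds on the instance itself.
# Endpoint choice (/v1/models), default URL, timeout and interval are assumptions.

wait_for_localai() {
  local base_url="${1:-http://localhost:8080}"
  local timeout="${2:-600}"   # seconds
  local interval="${3:-5}"    # seconds between attempts
  local waited=0
  # curl -f returns non-zero on connection errors and HTTP status >= 400.
  until curl -fs -o /dev/null "$base_url/v1/models"; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "LocalAI did not respond within ${timeout}s" >&2
      return 1
    fi
    sleep "$interval"
    waited=$((waited + interval))
  done
  echo "LocalAI is ready at $base_url"
}
```

Called without arguments, `wait_for_localai` polls http://localhost:8080.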

Network changes

LocalAI listens on port 8080, so we need to allow inbound traffic on that port to this EC2 instance.

Do this by adding an inbound rule for TCP port 8080 to the instance's security group (SecurityGroup > Inbound rules).
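The same inbound rule can be added with the AWS CLI command `aws ec2 authorize-security-group-ingress`. This sketch assumes a configured AWS CLI with permission to modify the instance's security group; the group ID in the usage line is a placeholder. By default it allows only your current public IP (looked up via checkip.amazonaws.com) as a /32 CIDR, rather than opening port 8080 to everyone.

```shell
#!/bin/sh
# Sketch: allow inbound TCP 8080 to the LocalAI instance.
# Requires a configured AWS CLI; the security group ID is supplied by you.

# Prints this machine's public IPv4 address as a /32 CIDR.
my_cidr() {
  printf '%s/32\n' "$(curl -fsS https://checkip.amazonaws.com | tr -d '[:space:]')"
}

# Adds an inbound rule for TCP port 8080 from CIDR $2 to security group $1.
open_localai_port() {
  local sg_id="$1"
  local cidr="${2:-$(my_cidr)}"
  aws ec2 authorize-security-group-ingress \
    --group-id "$sg_id" \
    --protocol tcp \
    --port 8080 \
    --cidr "$cidr"
}
```

Run it from your workstation, not from the instance, so that my_cidr returns your own address: `open_localai_port sg-0123456789abcdef0` (placeholder ID). Pass a different CIDR as the second argument if you need broader access.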

Working with LocalAI

The following request checks whether the inbound rule for port 8080 works and, at the same time, shows which models are available to LocalAI.

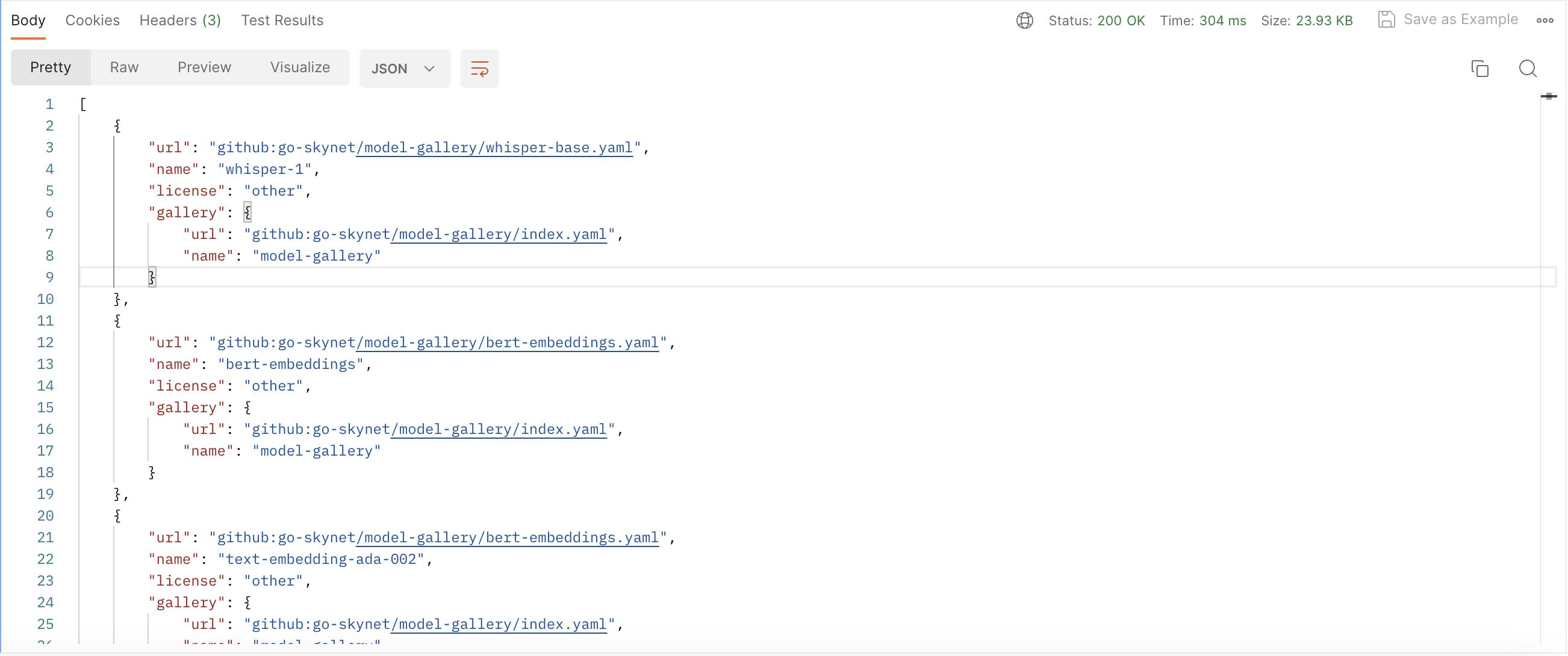

Use a REST API client (e.g., Postman) to send a GET request to the following URL, or run the equivalent curl command:

$ curl http://[EC2-INSTANCE-PUBLIC-IP]:8080/models/available

The response lists the models that can be installed from the galleries configured in .env.

By default, LocalAI comes with no models installed. You can always check which models are installed by issuing the following request:

GET

http://[EC2-INSTANCE-PUBLIC-IP]:8080/v1/models

The response looks similar to this:

    {
      "object": "list",
      "data": []
    }
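The same check can be scripted. This sketch calls /v1/models with curl and prints one model ID per line, parsing the JSON with python3's json module (an assumption: python3 must be installed where you run it). `list_models` is a hypothetical name; its argument is the instance's public IP.

```shell
#!/bin/sh
# Sketch: list installed LocalAI model IDs, one per line.
# Assumes python3 is available for JSON parsing; function name is hypothetical.

list_models() {
  local host="$1"
  curl -fsS "http://${host}:8080/v1/models" |
    python3 -c 'import json, sys
for model in json.load(sys.stdin)["data"]:
    print(model["id"])'
}
```

Right after installation, `list_models <EC2-INSTANCE-PUBLIC-IP>` prints nothing, matching the empty data list above.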

Now let's apply (install) the BERT embeddings model under the name text-embedding-ada-002, since that is the model name we want to use in our case.

First, let's apply the model:

POST

http://[EC2-INSTANCE-PUBLIC-IP]:8080/models/apply

with the following JSON body:

    {
      "url": "github:go-skynet/model-gallery/bert-embeddings.yaml",
      "name": "text-embedding-ada-002"
    }

Response:

    {
      "uuid": "2067d79c-5944-11ee-a290-0242ac130002",
      "status": "http://[EC2-INSTANCE-PUBLIC-IP]:8080/models/jobs/2067d79c-5944-11ee-a290-0242ac130002"
    }
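The apply request can also be sent from a script. This sketch posts the same JSON body with curl and prints the status URL from the response; `apply_embeddings_model` is a hypothetical name, and python3 is assumed for JSON parsing.

```shell
#!/bin/sh
# Sketch: install the BERT embeddings model from the gallery under the name
# text-embedding-ada-002 and print the job status URL from the response.

apply_embeddings_model() {
  local host="$1"
  curl -fsS -X POST "http://${host}:8080/models/apply" \
    -H 'Content-Type: application/json' \
    -d '{"url": "github:go-skynet/model-gallery/bert-embeddings.yaml", "name": "text-embedding-ada-002"}' |
    python3 -c 'import json, sys; print(json.load(sys.stdin)["status"])'
}
```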

You can check the status of the job by sending a GET request to the status URL returned in the response.

You can also watch the models folder on the EC2 instance (./models in the LocalAI directory, which docker-compose.yaml mounts as /models in the container). Once the job has completed successfully, repeat the GET request that lists the installed models; this time the data list will not be empty.
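Polling the status URL can be scripted as well. This sketch loops until the job reports completion or failure. It ASSUMES the job status JSON contains a boolean `processed` field and an `error` field that is null on success; these field names are not shown in this post, so check them against the response your LocalAI version returns. The helper names and the default interval and retry count are illustrative.

```shell
#!/bin/sh
# Sketch: poll a LocalAI gallery job until it finishes.
# ASSUMPTION: the job JSON has a boolean "processed" field and an "error"
# field that is null on success; verify against your LocalAI version.

# Reads job JSON on stdin and prints done, failed or pending.
job_state() {
  python3 -c 'import json, sys
try:
    job = json.load(sys.stdin)
except ValueError:
    job = {}
if job.get("error"):
    print("failed")
elif job.get("processed"):
    print("done")
else:
    print("pending")'
}

# Polls status URL $1 every $2 seconds (default 10), at most $3 times (default 60).
wait_for_job() {
  local url="$1" interval="${2:-10}" tries="${3:-60}" state
  while [ "$tries" -gt 0 ]; do
    state=$(curl -fsS "$url" | job_state)
    case "$state" in
      done) echo "job finished"; return 0 ;;
      failed) echo "job failed" >&2; return 1 ;;
    esac
    tries=$((tries - 1))
    sleep "$interval"
  done
  echo "job still pending" >&2
  return 1
}
```

Pass the status URL from the apply response, e.g. `wait_for_job http://[EC2-INSTANCE-PUBLIC-IP]:8080/models/jobs/<uuid>`.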

GET

http://[EC2-INSTANCE-PUBLIC-IP]:8080/v1/models

The response is:

    {
      "object": "list",
      "data": [
        {
          "id": "text-embedding-ada-002",
          "object": "model"
        }
      ]
    }

Now let's test whether it works by requesting an embedding:

POST

http://[EC2-INSTANCE-PUBLIC-IP]:8080/v1/embeddings

with the following JSON body:

    {
      "input": "Test",
      "model": "text-embedding-ada-002"
    }

Response:

    {
      "object": "list",
      "model": "text-embedding-ada-002",
      "data": [
        {
          "embedding": [
            0.009794451,
            0.025233647,
            -0.030652339,
            0.054846156,
            ...
            -0.05938596
          ],
          "index": 0,
          "object": "embedding"
        }
      ],
      "usage": {
        "prompt_tokens": 0,
        "completion_tokens": 0,
        "total_tokens": 0
      }
    }
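For scripted tests, this sketch sends the same kind of request with curl. It builds the JSON body with python3's json.dumps so that quotes or newlines in the input text are escaped correctly, and a second helper prints the length of the returned embedding vector. Both function names are hypothetical, and python3 is assumed to be installed.

```shell
#!/bin/sh
# Sketch: request an embedding for arbitrary text from LocalAI.
# python3 builds the JSON body so quotes in the text are escaped correctly.

embed() {
  local host="$1" text="$2"
  python3 -c 'import json, sys
print(json.dumps({"input": sys.argv[1], "model": "text-embedding-ada-002"}))' "$text" |
    curl -fsS -X POST "http://${host}:8080/v1/embeddings" \
      -H 'Content-Type: application/json' \
      -d @-
}

# Prints the number of dimensions of the first embedding in a response on stdin.
embedding_dims() {
  python3 -c 'import json, sys
print(len(json.load(sys.stdin)["data"][0]["embedding"]))'
}
```

Usage: `embed <EC2-INSTANCE-PUBLIC-IP> 'Test' | embedding_dims` prints the vector dimension.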

The only thing I didn't like was the response time: roughly 21 seconds (21.03 s)!
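To see whether every request is this slow or only some of them, you can time several requests with curl's `%{time_total}` write-out variable, which reports the total transfer time in seconds. A sketch; the function name and the default of 5 runs are arbitrary.

```shell
#!/bin/sh
# Sketch: time N embedding requests and print each duration plus the average.
# Function name and default run count are arbitrary choices.

time_embeddings() {
  local host="$1" runs="${2:-5}" i=1
  while [ "$i" -le "$runs" ]; do
    curl -sS -o /dev/null -w '%{time_total}\n' \
      -X POST "http://${host}:8080/v1/embeddings" \
      -H 'Content-Type: application/json' \
      -d '{"input": "Test", "model": "text-embedding-ada-002"}'
    i=$((i + 1))
  done | awk '{ printf "run %d: %.2f s\n", NR, $1; total += $1 }
              END { if (NR) printf "average: %.2f s\n", total / NR }'
}
```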

References

The Docker and Docker Compose installation steps on the EC2 instance follow this tutorial: